Gambiaj.com – (KIGALI, Rwanda) – A report by Clemson University's Media Forensics Hub has revealed a coordinated online campaign that uses large language models (LLMs) such as ChatGPT, along with other AI tools, to promote the Rwandan government's projects and policies. The campaign has used these tools to mass-produce messages that simulate genuine support on X (formerly Twitter) and to flood the platform with attacks on Rwanda's critics.

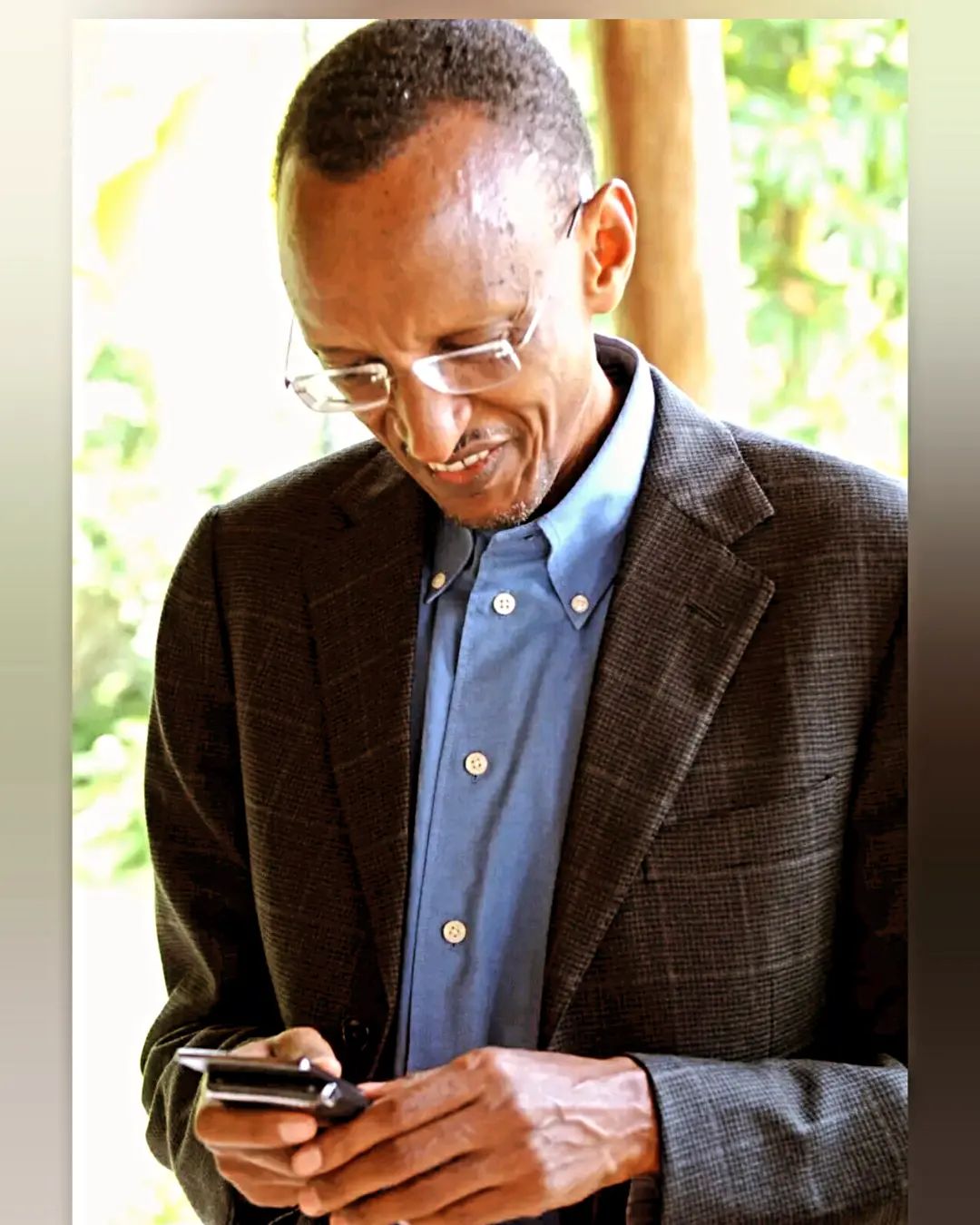

The campaign targets audiences across the region and in Europe, as well as citizens within Rwanda. The timing of the revelation is significant: Rwanda holds elections on 15 July, at which President Paul Kagame is expected to secure another landslide victory.

Despite X's policy prohibiting the use of LLMs in this way, the platform has taken no action against the suspect accounts, and it remains unclear whether they are under investigation. Earlier reports from Africa Confidential highlighted Kigali's collaboration with Jonathan Scott, a self-described 'white hat hacker', and with the Milad Group, both of which have a history of involvement in cybersecurity operations.

Before LLMs came into widespread use, X relied on techniques that flagged and removed mass postings echoing government talking points. LLMs, however, allow pro-Kigali networks to produce messages with varied phrasing, which makes them harder for X's monitoring software to detect. The technology also sharply reduces the time needed to generate such messages while preserving their core sentiment.

The Clemson report underscores the impact of this technology: pro-Rwanda networks use LLMs to drown out critics through sheer volume and to generate seemingly independent support for Kigali. These efforts intensified after public controversies such as Rwanda's support for the M23 militia in eastern Congo-Kinshasa and the death of Rwandan journalist John Williams Ntwali. In both cases, pro-Rwanda accounts tried to divert attention by highlighting government achievements, including sports sponsorships.

The researchers analyzed 464 accounts responsible for more than 650,000 messages posted since the beginning of the year, and many of these accounts remain active. A significant portion of the messages supported Rwanda's stance on the conflict in Congo-Kinshasa, where Kagame is accused of military involvement and of backing M23. The posts frequently accused the Kinshasa government of collaborating with the Hutu-led FDLR militia, which is linked to the 1994 Rwandan genocide.

The campaign also made wide use of visual content, often combining AI-generated templates with photo editing. For example, the face of Rwandan opposition politician Victoire Ingabire was superimposed on an image of a militia fighter. Other AI-generated images portrayed activists critical of Rwanda in a negative light.

The use of AI was exposed by errors, such as posts that accidentally included ChatGPT prompts and responses. In one notable slip, a post contained a prompt asking for 50 content ideas using the hashtag #ThanksPK.

The report highlights how structured the campaign was, with different accounts focusing on specific themes, such as attacking the Burundian army's alleged collaboration with the FDLR. Most messages within the network were posted during standard working hours, suggesting that the posting was carried out as regular office-hours work.

Despite the advanced tools involved, the Clemson University researchers were not surprised by the campaign, given the Rwandan government's history of digital propaganda. Analysts noted that removing accounts engaged in coordinated behavior could limit the network's ability to deflect criticism and to damage the reputations of its targets.